Advances in preclinical development play a crucial role in reducing the cost of developing biosimilars.

By Anurag S. Rathore, Narendra Chirmule, and Himanshu Malani

Drug development is undergoing a transformation from multi-billion-dollar blockbusters to personalized medicines. More than 300 active biopharmaceutical product licenses in the market today bear witness to increasing interest in targeted treatments. The market for protein-based biologics is anticipated to exceed $200 billion by 2022. This popularity can be attributed to their unique target specificity in protein–protein interactions for the treatment of complex diseases such as cancer, hematological disorders, and autoimmune diseases (1).

The development of protein therapeutics, however, involves highly complex manufacturing because of the variable nature of protein expression in biological systems. Furthermore, developing this class of drugs is expensive and time consuming. Costs range from approximately $500 million to $3 billion, and development takes more than a decade, most of which is spent on clinical trials (2,3). While effective and successful, biotherapeutics are presently marred by their high costs, which put them beyond the reach of much of the world's population and thereby limit their accessibility.

The advent of biosimilars is an opportunity to address affordability. Biosimilar development costs have been estimated at approximately $50 to $300 million, with development times of less than eight years (4). While considerably lower than the corresponding figures for novel biotherapeutics, these are at least an order of magnitude higher than those for small-molecule generics. Other challenges for biosimilars are frequent failures to demonstrate biosimilarity and to establish clinical equivalence for efficacy, immunogenicity, and safety (5). There is a growing need to develop reliable, nonclinical approaches that faithfully represent clinical safety and efficacy. Exponential advances in new technologies and computing, such as mathematical modeling of pharmacokinetics (PK) and pharmacodynamics (PD), organ-on-a-chip platforms, in-silico algorithms, and transgenic animal models, are likely to significantly impact drug development in the decade to come (6). In this article, the authors explore the role that these technologies can play in reducing biosimilar development cost and time.

Limitations of clinical studies

Clinical trials have long served as the gold standard for evaluating the safety and efficacy of drugs in a systematic manner, using established, robust statistical processes that allow clinical hypotheses to be tested and validated. For biosimilars, factors that significantly impact affordability and development time include development cost, variability in product quality, and the low success rates of trials.

Cost of biosimilar development

Development of biosimilars begins with cloning the target gene into an appropriate host cell line. This is followed by a multistep process involving upstream processing (e.g., bioreactor design), downstream processing (e.g., separation and purification technologies) for removal of impurities (e.g., extractables, leachables, host cell species, variants, and contaminants), and formulation (e.g., liquid/lyophilized/frozen product, stability) to obtain a purified, concentrated product. Analytical and functional evaluation of the quality attributes of a biotherapeutic product is an integral part of process development (see Figure 1). This characterization is typically followed by preclinical studies evaluating pharmacology (e.g., PK, PD, immunogenicity) and toxicology (e.g., small and large animal studies) to establish margins of safety and efficacy of the product. The final steps involve testing biosimilarity in clinical trials. A Phase I trial aims to assess the pharmacology (PK/PD) and initial safety and tolerability of the biosimilar. Because the therapeutic dose and clinical indications are established by the reference product, Phase II trials are typically not required for biosimilars. A Phase III trial is a confirmatory study for evaluating biosimilarity with respect to safety and PK/PD (7,8). In most cases, these confirmatory studies cannot be powered to test for efficacy (5). After approval, the performance of the product is monitored through pharmacovigilance. Given this arduous journey from lab to market, it is no surprise that biologics demand significant investments, the burden of which ultimately falls on patients.
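For the Phase I PK comparison, similarity is commonly judged by whether the 90% confidence interval of the geometric mean ratio (biosimilar/reference) for exposure parameters such as AUC and Cmax falls within 80–125%. The sketch below shows that calculation for a parallel-group design. It is an illustration under stated assumptions, not a regulatory-grade analysis: the function names are hypothetical, exposures are assumed to be log-normal, and a normal (z) quantile stands in for the t quantile so that only the Python standard library is needed, which makes the interval slightly too narrow for small groups.

```python
import math
import statistics
from statistics import NormalDist


def gmr_90ci(test, reference):
    """Return the geometric mean ratio (test/reference) and an approximate
    90% confidence interval for a parallel-group PK comparison.

    Assumes log-normally distributed exposures and uses a normal (z) quantile
    instead of a t quantile, so the interval is slightly too narrow for small groups.
    """
    log_t = [math.log(x) for x in test]
    log_r = [math.log(x) for x in reference]
    diff = statistics.fmean(log_t) - statistics.fmean(log_r)
    # Standard error of the difference in log means (unequal variances allowed).
    se = math.sqrt(statistics.variance(log_t) / len(log_t)
                   + statistics.variance(log_r) / len(log_r))
    z = NormalDist().inv_cdf(0.95)  # two one-sided 5% tests correspond to a 90% CI
    return math.exp(diff), math.exp(diff - z * se), math.exp(diff + z * se)


def pk_equivalent(test, reference, lower=0.80, upper=1.25):
    """True if the whole 90% CI of the GMR lies within [lower, upper]."""
    _, ci_low, ci_high = gmr_90ci(test, reference)
    return lower <= ci_low and ci_high <= upper


# Hypothetical AUC values (arbitrary units) for each study arm.
reference_auc = [1020.0, 980.0, 1110.0, 950.0, 1060.0, 1005.0, 990.0, 1080.0]
biosimilar_auc = [1000.0, 1045.0, 970.0, 1090.0, 1015.0, 960.0, 1030.0, 1050.0]

gmr, ci_low, ci_high = gmr_90ci(biosimilar_auc, reference_auc)
print(f"GMR {gmr:.3f}, 90% CI {ci_low:.3f} to {ci_high:.3f}")
print("PK similar:", pk_equivalent(biosimilar_auc, reference_auc))
```

The same check would be repeated for each exposure parameter; a crossover design, which is not covered here, would instead work with within-subject differences.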

Making biotherapeutics affordable is the holy grail for biosimilars. Recent advancements in technology and computational biology offer tools for developing accurate, predictive methods to understand potential risks related to safety and efficacy. With ever-growing therapeutic modalities (such as bifunctional molecules, antibody-drug conjugates, and cell and gene therapies) (1), advanced analytical tools for measuring primary, secondary, and tertiary structures (such as mass cytometry, mass spectrometry [MS], and cryo-electron microscopy), and new computational tools (9,10), the traditional drug development process is in need of transformation.

Variability in product quality

Variants. Biological processes are highly variable. Variability is intrinsic to protein expression, including post-translational modification, and manifests itself in several product attributes. The critical quality attributes of a product are defined as physical, chemical, biological, or microbiological properties or characteristics that should be within an appropriate limit, range, or distribution to ensure the desired product quality (11). For example, antibodies made by a single Chinese hamster ovary (CHO) cell line can carry several dozen distinct glycan patterns. Each glycosylated variant of the antibody can have different biological effects with respect to efficacy and safety. Because this complexity makes it nearly impossible to study the effect of each variant in clinical trials, surrogate in-silico and in-vitro methods need to be developed and validated so that scientists can predict the impact of product variants (such as aggregated, oxidized, and isomerized forms) on safety and efficacy (12,13).
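A quick count shows how fast glycan heterogeneity multiplies. In a simplified picture, an IgG carries one N-linked glycan in the Fc region of each of its two heavy chains (at Asn297), so k glycan structures per site yield k(k+1)/2 distinct pairings per molecule. The glycan names below are an illustrative panel, not data for any specific product, and the sketch ignores Fab glycosylation and other modifications that would multiply the count further.

```python
from itertools import combinations_with_replacement


def fc_glycoform_pairs(glycans):
    """Enumerate unordered glycan pairings across the two heavy-chain Fc
    N-glycosylation sites of an IgG. Assumes one site per heavy chain and
    that the two chains are interchangeable, so (A, B) counts the same as (B, A)."""
    return list(combinations_with_replacement(sorted(glycans), 2))


# Illustrative panel of commonly reported Fc glycans (not product data).
glycans = ["G0F", "G1F", "G2F", "G0", "Man5", "G1", "G2FS1", "G0F-GlcNAc"]
pairs = fc_glycoform_pairs(glycans)
print(len(pairs))  # 8 glycans -> 8 * 9 / 2 = 36 pairings
```

Even this small panel gives 36 molecular glycoforms, which is why the text argues for surrogate methods rather than clinical testing of each variant.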

Impurities. The manufacturing process itself generates impurities and is thus a source of product heterogeneity. Characterizing and separating these impurities is essential for ensuring that product quality meets the stringent criteria for biotherapeutic products. A thorough analytical and functional evaluation of biosimilarity serves as the foundation for biosimilar development. The manufacturer is expected to demonstrate a lack of measurable differences in product, product variants, and impurities between the biosimilar and the reference product. If differences are identified, their impact needs to be investigated through a detailed structure-function analysis. Biosimilars are approved only when there is no “meaningful clinical impact” (14). Because variants and impurities are often present at extremely low levels (sometimes in the ppm range), it is difficult to purify the individual species needed for this analysis. Furthermore, adequate process controls to monitor and control product quality are necessary for receiving regulatory approval. Ultimately, these requirements place a burden on the manufacturer to invest resources in product and process development, which adds to the cost of developing the biosimilar (15).
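One approach that has been discussed for judging whether biosimilar lots show measurable differences from the reference product is a quality range built from reference lots: the reference mean plus or minus a multiple k of the reference standard deviation, followed by a check of what fraction of biosimilar lots falls inside it. The sketch below assumes k = 3 and uses hypothetical main-peak purity values; the function names are illustrative, and the appropriate multiplier, number of lots, and acceptance fraction are product-specific decisions that this example does not settle.

```python
import statistics


def quality_range(reference_lots, k=3.0):
    """Quality range = reference mean +/- k * reference standard deviation.
    k = 3 is an assumption for illustration."""
    mean = statistics.fmean(reference_lots)
    sd = statistics.stdev(reference_lots)  # sample standard deviation
    return mean - k * sd, mean + k * sd


def fraction_within(test_lots, bounds):
    """Fraction of test lots whose value falls inside the (low, high) bounds."""
    low, high = bounds
    inside = sum(1 for x in test_lots if low <= x <= high)
    return inside / len(test_lots)


# Hypothetical main-peak purity (%) by size-exclusion chromatography.
reference_lots = [98.1, 98.4, 97.9, 98.3, 98.0, 98.2]
biosimilar_lots = [98.2, 98.0, 97.5]

bounds = quality_range(reference_lots)
print(f"Quality range: {bounds[0]:.2f} to {bounds[1]:.2f}")
print(f"Biosimilar lots within range: {fraction_within(biosimilar_lots, bounds):.0%}")
```

A lot falling outside the range does not by itself establish a clinically meaningful difference; in the framework described above it triggers the structure-function investigation.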

Immunogenicity. Differences in immunogenicity between a biosimilar and its reference product can be observed only through clinical trials. Recent advances in immunogenicity prediction tools could be used to understand such potential differences (16). European Medicines Agency (EMA) guidance includes recommendations on using such predictive tools to provide supportive information for biosimilarity (17).
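Many in-silico T-cell epitope tools scan a protein sequence for 9-residue core peptides that might bind HLA class II molecules and then score each core. The scoring itself is tool-specific and is not reproduced here. The hypothetical sketch below performs only the preceding bookkeeping step: listing 9-mers that occur in a candidate sequence but not in the reference, i.e., the peptides a prediction tool would still need to score when comparing a biosimilar with its reference. The sequence fragment and substitution are illustrative.

```python
def kmers(sequence, k=9):
    """All overlapping peptides of length k in a protein sequence."""
    return {sequence[i:i + k] for i in range(len(sequence) - k + 1)}


def novel_cores(candidate, reference, k=9):
    """k-mers present in the candidate but absent from the reference.
    These are the peptides an epitope-prediction tool would still need to score."""
    return sorted(kmers(candidate, k) - kmers(reference, k))


# Illustrative heavy-chain fragment and a hypothetical single substitution (Q -> K).
reference = "EVQLVESGGGLVQPGGSLRLSCAAS"
candidate = reference[:12] + "K" + reference[13:]

for core in novel_cores(candidate, reference):
    print(core)
```

A single internal substitution can create up to nine new 9-mer cores, which is one reason why even small sequence or variant differences are examined with these tools.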

Thus, establishing biosimilarity requires an in-depth understanding of product and process variability, process controls, and specifications for manufacturing to ensure that product quality meets the expected standards and stays consistent from batch to batch. Other contributors to variability in manufacturing include the sourcing and qualification of raw materials and changes in manufacturing processes (e.g., scale, sites) (18). With digital technologies for collecting, analyzing, and interpreting data, the potential impact of product variability on clinical safety or efficacy can be predicted, and the variability itself substantially reduced. Such digitalization approaches can significantly reduce the dependence on clinical trials.

Failure rates of clinical trials

Clinical studies often provide the final verdict on product safety and efficacy. This may also be true for biosimilars, but for them a clinical study is not the most conclusive exercise. Biosimilar trials have a much lower failure rate than those for novel biologics, yet on their own they are typically not sufficiently powered to detect clinically meaningful differences. Most switching studies for biosimilars have not reported any new adverse events, and researchers all over the world have reported similar experience (19). Such results fail to justify the financial burden of large clinical studies and underscore the importance of analytical similarity. These shortcomings indicate an urgent need for approaches based on predictive modeling that clarify the relationships among biological pathways, the mechanism of disease pathogenesis, product quality, pharmacology, and toxicology.
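Why powering an efficacy comparison is so costly follows from standard sample-size arithmetic for an equivalence test. Under a normal approximation, assuming a continuous endpoint with common standard deviation σ, a true difference of zero, and an equivalence margin of ±Δ, two one-sided tests at level α with power 1 − β need roughly n = 2σ²[z(1 − α) + z(1 − β/2)]²/Δ² subjects per arm, where z(p) is the standard normal quantile. The sketch below evaluates that formula; the function name and inputs are illustrative, and real trial designs use exact or simulation-based methods.

```python
import math
from statistics import NormalDist


def equivalence_n_per_arm(sigma, margin, alpha=0.05, power=0.90):
    """Approximate subjects per arm for a two-arm equivalence trial
    (two one-sided tests), assuming a true difference of zero."""
    z = NormalDist().inv_cdf
    beta = 1.0 - power
    n = 2.0 * sigma ** 2 * (z(1.0 - alpha) + z(1.0 - beta / 2.0)) ** 2 / margin ** 2
    return math.ceil(n)


# Standardized endpoint (sigma = 1): halving the margin roughly quadruples n.
for margin in (0.4, 0.2):
    print(margin, equivalence_n_per_arm(sigma=1.0, margin=margin))
```

Because n scales with 1/Δ², tightening the margin enough to detect subtle differences quickly drives trial size, and cost, beyond what is practical.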

Advances in preclinical development

Analytics

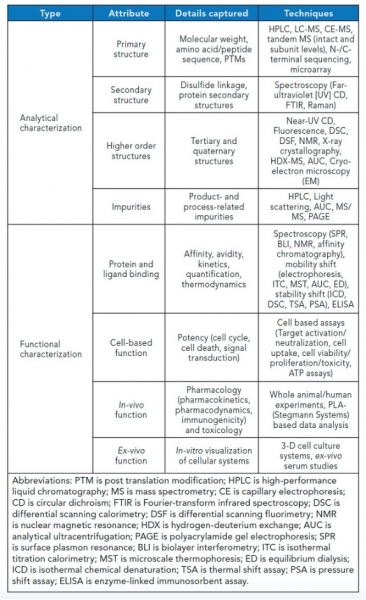

The complexity of biotherapeutic molecules, in particular monoclonal antibodies (mAbs), makes them fascinating targets for analytical and functional characterization. The past decade has witnessed significant strides in our ability to characterize these molecules, with new tools and approaches achieving high resolution, sensitivity, robustness, and specificity. Typical comparability exercises involve an arsenal of analytical and functional tools capable of characterizing various facets of these molecules (see Table I).

Table I. Analytical and functional tools capable of characterizing various facets of biotherapeutics (see note below the Table for abbreviations).

The task of similarity assessment is of critical importance for efficient development of biosimilars, with information being unravelled at multiple levels. Among all available analytical technologies, MS has seen the biggest jump in terms of depth and scope of investigation. It is a versatile tool at different stages of biosimilar development, namely, clone selection (e.g., clonal proteome variations, product confirmation, sequence, glycosylation, residue modifications), process scaleup (e.g., batch variations, impurities, leachables), and clinical studies (e.g., serum analysis, tissue proteomics, biomarker discovery). The field has seen simultaneous advances on multiple fronts of instrumentation: ionization sources, such as matrix-assisted laser desorption/ionization, electrospray ionization (ESI), and nano-ESI; mass analyzers, such as quadrupole-time of flight (QTOF), triple quadrupole (QqQ), Fourier transform-ion cyclotron resonance (FTICR), Orbitrap, and ion-mobility; fragmentation by electron-transfer dissociation (ETD) and electron-capture dissociation (ECD) as alternatives to classic collision-induced dissociation (CID); and data acquisition, including data-independent acquisition (DIA) modes such as alternating low-energy and high-energy CID-MS and sequential window acquisition of all theoretical spectra (SWATH). The multi-attribute method (MAM) and the coupling of MS with liquid chromatography (LC) and capillary electrophoresis allow simultaneous measurement of multiple product- and process-related attributes and impurities in an automated, high-throughput mode. MS also offers an excellent orthogonal approach to traditional immunochemical assays for identifying and quantifying host cell proteins at ppm levels, using cutting-edge LC–MS sample preparation and analysis techniques.
DIA strategies such as SWATH overcome the limited sampling of low-abundance peptides in data-dependent acquisition by fragmenting all precursors within a series of narrow, sequential acquisition windows, thus increasing coverage. Rapidly growing databases and sequencing algorithms provide bioinformatics support to deconvolute experimental data. Another growing application in biologic characterization is the determination of higher order structure (HOS) using hydrogen-deuterium exchange and crosslinking chemistries coupled with MS. These methodologies elucidate the 3-D conformational dynamics of proteins, thus enabling predictions of hotspots for post-translational modifications (PTMs) and of the sites and extent of degradation (20).
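As a small example of such deconvolution, intact-mass analysis by ESI produces a series of peaks for one protein, each at m/z = (M + z·m_p)/z, where M is the neutral mass, z the charge, and m_p the proton mass (about 1.007276 Da). Two adjacent charge-state peaks are enough to solve for z and M. The sketch below is a minimal illustration with hypothetical function names and a made-up 148 kDa antibody mass; production deconvolution software fits the whole charge envelope and handles noise, adducts, and overlapping species.

```python
PROTON_MASS = 1.007276  # Da


def mz_for(mass, z):
    """m/z of a neutral mass carrying z extra protons (positive-mode ESI)."""
    return (mass + z * PROTON_MASS) / z


def charge_and_mass(mz_a, mz_b):
    """Infer charge and neutral mass from two adjacent charge-state peaks.

    mz_a is the higher-m/z peak (charge z); mz_b is its neighbour (charge z + 1).
    From z * (mz_a - p) = (z + 1) * (mz_b - p) it follows that
    z = (mz_b - p) / (mz_a - mz_b).
    """
    z = round((mz_b - PROTON_MASS) / (mz_a - mz_b))  # rounding absorbs small m/z errors
    mass = z * (mz_a - PROTON_MASS)
    return z, mass


# Hypothetical intact antibody of 148,000 Da observed at charges 50 and 51.
peak_50 = mz_for(148_000.0, 50)
peak_51 = mz_for(148_000.0, 51)
z, mass = charge_and_mass(peak_50, peak_51)
print(z, round(mass, 1))  # 50 148000.0
```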

Spectroscopic techniques have also advanced the understanding of structure–function relationships. State-of-the-art spectroscopic tools allow orthogonal assessment of secondary and tertiary structures. Raman, Fourier transform infrared, and circular dichroism (CD) spectroscopy are increasingly used for fingerprinting biomolecules, using PTMs such as glycosylation and stability parameters as barcodes. Near-infrared spectroscopic methods find application as process analytical tools in upstream and downstream processing of biotherapeutics, aiding in process control. The unique infrared spectral signatures of chemical functional groups make them convenient fingerprints for HOS assessment. Thermal CD is used to probe the folding-unfolding profile of proteins, which is a critical component of stability studies for biosimilarity. Nano differential scanning fluorimetry (nano-DSF) has emerged as a high-throughput platform for screening biotherapeutic formulations. Light scattering tools permit label-free size characterization, thus aiding in aggregation screening. While the above-mentioned techniques probe the relative 3D conformation of proteins, X-ray diffraction (XRD), nuclear magnetic resonance (NMR), and, more recently, electron microscopy (EM) are increasingly used to determine the spatial positions of atoms within proteins. Complementary uses of these techniques include aggregate analysis by EM, protein-ligand interaction studies by NMR, and XRD studies of structural variations resulting from sequence changes (21).
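In nano-DSF, the ratio of intrinsic fluorescence at 350 nm and 330 nm shifts as a protein unfolds, and the melting temperature (Tm) is usually read as the inflection point of that ratio, i.e., the maximum of its first derivative with respect to temperature. The sketch below applies a central-difference derivative to synthetic data generated from a logistic curve with an assumed Tm of 70 °C. The function name is hypothetical, and real instrument software typically smooths the data first and may report several transitions for multidomain proteins such as mAbs.

```python
import math


def melting_temperature(temps, ratios):
    """Estimate Tm as the temperature with the steepest change in the
    350/330 nm ratio, using central differences at interior points."""
    best_t, best_slope = None, -1.0
    for i in range(1, len(temps) - 1):
        slope = (ratios[i + 1] - ratios[i - 1]) / (temps[i + 1] - temps[i - 1])
        if abs(slope) > best_slope:
            best_t, best_slope = temps[i], abs(slope)
    return best_t


# Synthetic unfolding curve: logistic transition with an assumed Tm of 70 degC.
temps = [40.0 + 0.5 * i for i in range(111)]  # 40 to 95 degC in 0.5 degC steps
ratios = [0.6 + 0.4 / (1.0 + math.exp((70.0 - t) / 1.5)) for t in temps]
print(melting_temperature(temps, ratios))
```

Comparing Tm values of biosimilar and reference lots measured under identical conditions is one way such data feed into the stability part of a similarity assessment.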

Functional characterization for structure-function relationships has also seen similar advances. Surface plasmon resonance (SPR), biolayer interferometry (BLI), and isothermal titration calorimetry (ITC) remain popular for estimating binding kinetics and affinity because they are label-free and, in the case of SPR and BLI, high throughput. With new configurations and solid-state designs, SPR has become a workhorse in protein and nucleic acid applications. Sensor surfaces have been modified to allow cell-based binding that mimics real-time binding events. Cell-based binding in SPR and BLI takes into account the cell-surface expression of receptors, allowing label-free detection of binding and saturation events. Recent advances in protein interaction studies include the use of BLI for high-throughput formulation screening and stress prediction based on binding profiles. ITC has been explored for binding kinetic analysis in addition to conventional estimation of thermodynamic parameters (22). This information could help hasten the drug design process. Potency assays represent another level of functional assessment and are a prerequisite for proposing in-vivo experiments. 3D cellular systems have recently been used to screen anticancer drug candidates for efficacy in an ex-vivo tumor microenvironment. Bioprinted cancer models may be the next big thing in safety and efficacy measurement, overcoming the difficulties of in-vivo preclinical xenograft studies (23).
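SPR and BLI sensorgrams are most often interpreted with a 1:1 (Langmuir) binding model, in which the equilibrium dissociation constant is KD = koff/kon, the association phase approaches Req = Rmax·C/(C + KD) at the observed rate kobs = kon·C + koff, and the dissociation phase decays as exp(−koff·t). The sketch below simulates both phases and recovers koff from a log-linear fit of the dissociation phase. The function names and rate constants are hypothetical, and real analyses fit several analyte concentrations globally and check for deviations from 1:1 behavior.

```python
import math


def association(t, conc, kon, koff, rmax):
    """1:1 Langmuir association-phase response at time t (s) for analyte conc (M)."""
    kd = koff / kon
    r_eq = rmax * conc / (conc + kd)
    k_obs = kon * conc + koff
    return r_eq * (1.0 - math.exp(-k_obs * t))


def dissociation(t, r0, koff):
    """1:1 dissociation phase starting from response r0."""
    return r0 * math.exp(-koff * t)


def fit_koff(times, responses):
    """Estimate koff as minus the least-squares slope of ln(R) versus t."""
    ys = [math.log(r) for r in responses]
    t_mean = sum(times) / len(times)
    y_mean = sum(ys) / len(ys)
    num = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, ys))
    den = sum((t - t_mean) ** 2 for t in times)
    return -num / den


# Hypothetical interaction: kon = 1e5 1/(M*s), koff = 1e-4 1/s, so KD = 1 nM.
kon, koff, rmax = 1.0e5, 1.0e-4, 100.0
print("KD (M):", koff / kon)

r_end = association(600.0, 10e-9, kon, koff, rmax)  # 10 nM analyte for 600 s
times = [60.0 * i for i in range(11)]
responses = [dissociation(t, r_end, koff) for t in times]
print("fitted koff (1/s):", fit_koff(times, responses))
```

In a comparability exercise, the fitted kon, koff, and KD of the biosimilar would be compared with those of the reference product measured in the same experimental setup.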

Preclinical animal studies

Preclinical assessment usually involves data collection from at least two species: a rodent (rat/mouse) and a non-rodent species (e.g., dog) or non-human primates (NHPs). Recent advancements in genetic engineering have led to the generation of transgenic mice that express the species-specific target of interest. A typical study for complex biologics, such as mAbs, includes the following components: assessment of acute toxicity (two weeks to one month) via single- and multi-dose studies, including evaluation of analytical, absorption, distribution, metabolism, and excretion (ADME), PK–PD, immunogenicity, local tolerance, and safety attributes; chronic toxicity studies (three to 12 months); and reproductive toxicity studies when the indication for the biotherapeutic involves children or women of child-bearing age. These reproductive studies are designed at three levels: detection of drug in the newborn, PD effect of the drug in the newborn, and long-term (two-year) safety of the newborn. Most of these studies are not performed during biosimilar development, since these effects have previously been demonstrated with the reference product. Safety studies in animals are still needed to assess the potential risk of adverse events from product variants and impurities, although immunogenicity assessment in animals does not predict immune responses in humans. When a biosimilar uses a different formulation from the reference product, the impact of that formulation on safety and efficacy must be established via preclinical studies (24).

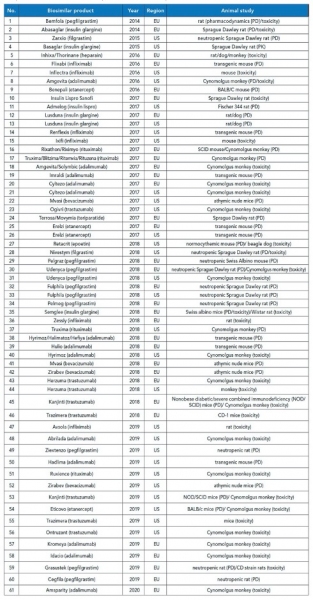

A review of biosimilars approved in the past five years suggests increasing use of rodents, followed by NHPs, for PK studies and of mice for PD studies (25) (see Table II). When the target antigen of the biotherapeutic is not expressed in animals, transgenic and humanized chimeric mice are used as surrogates. Recent advances in gene editing technologies (e.g., CRISPR-Cas9, transcription activator-like effector nucleases, and zinc finger nucleases) have enabled the generation of animal models of disease pathogenesis that can be used to assess pharmacology and toxicology. Thus, there is immense scope for advancing nonclinical studies as an alternative to clinical assessment (26,27). Animal models have limited potential for predicting the immunogenicity of biotherapeutics in humans. However, immunogenicity still needs to be assessed in animals, because anti-drug antibodies can alter drug exposure, and accurate exposure data are needed to interpret the results of PK, PD, and safety studies. EMA regulations do not require animal immunogenicity studies, while FDA and several other regulatory agencies require preclinical results prior to initiating clinical trials (28).
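PK data from the animal studies discussed above are commonly summarized by noncompartmental analysis (NCA): the observed Cmax and Tmax, the area under the concentration-time curve by the trapezoidal rule, and a terminal elimination rate constant λz from a log-linear fit of the last few points, giving t½ = ln 2/λz. The sketch below is a bare-bones version under simplifying assumptions: linear trapezoids, a fixed number of terminal points (three), no handling of concentrations below the limit of quantification, and a hypothetical mono-exponential profile. The function names are illustrative.

```python
import math


def auc_trapezoid(times, conc):
    """AUC from the first to the last sample by the linear trapezoidal rule."""
    return sum((times[i + 1] - times[i]) * (conc[i] + conc[i + 1]) / 2.0
               for i in range(len(times) - 1))


def nca(times, conc, n_terminal=3):
    """Minimal noncompartmental summary. Assumes the last n_terminal
    concentrations are positive and lie on the terminal log-linear phase."""
    cmax = max(conc)
    tmax = times[conc.index(cmax)]
    xs = times[-n_terminal:]
    ys = [math.log(c) for c in conc[-n_terminal:]]
    x_mean, y_mean = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    lambda_z = -slope
    return {
        "cmax": cmax,
        "tmax": tmax,
        "auc_0_t": auc_trapezoid(times, conc),
        "lambda_z": lambda_z,
        "half_life": math.log(2) / lambda_z,
    }


# Hypothetical IV-bolus profile: C(t) = 100 * exp(-0.1 t), t in days.
times = [0.0, 1.0, 2.0, 4.0, 8.0, 12.0, 24.0]
conc = [100.0 * math.exp(-0.1 * t) for t in times]
for name, value in nca(times, conc).items():
    print(f"{name}: {value:.3f}")
```

Parameters such as AUC and Cmax from these summaries are the quantities that are then compared between biosimilar and reference groups.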

Table II. List of approved biosimilars in the past five years (2014–2019) with the reported nonclinical animal models, compiled from various sources. EU is European Union.

Regulatory transformations

The regulatory landscape for biosimilars has seen many changes in the requirements for preclinical and clinical studies, in the reliance on analytical characterization studies, and in the approval process. Despite harmonization efforts, the biosimilar regulatory processes of major regulatory agencies still differ in several respects. The major hurdle is the requirement to demonstrate biosimilarity to the local reference product. The concepts of “totality of evidence (ToE)” and “confirmation of sufficient likeness (CSL)” have been introduced into regulatory guidelines and, more recently, revised (29,30).

Regulatory changes have affected attitudes toward the use of animal subjects. The evolution of the 3R principle (reduction, refinement, and replacement) reflects efforts to generate maximum data through a more humane approach (31). Although more than 60 years old, this principle is today deeply embedded in legislation and research methodology. Recently, a fourth R, “responsibility,” has been proposed in a white paper by the Max Planck Society, aimed at achieving the optimal trade-off between the need for animals in research and the obligation to protect them (32). The paper discusses using global reference standards to avoid repetition of animal studies, along with complete and sincere declaration of data and resources. Refinement focuses on humane practices of anaesthesia and euthanasia, maximal use of non-invasive methods to avoid sacrificing animals, and the use of appropriate statistical tools to organize the data. Replacement, on the other hand, proposes the use of modern in-vitro and in-silico techniques, such as computer-based prediction models (e.g., physiologically based PK models), organ-on-a-chip platforms that mimic in-vivo systems (as potential microphysiological systems), and the “omics” approach (e.g., genomics, proteomics) (33). Responsibility is inherently built into the other three Rs. In the future, scientists may need to address difficult ethical questions about animal and human studies and weigh which of the two demands a greater reduction.
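Physiologically based PK models, mentioned above as replacement tools, represent the body as organ compartments linked by blood flow, and for mAbs they often add processes such as FcRn-mediated recycling. The sketch below is deliberately far simpler: a one-compartment model with first-order absorption from a subcutaneous depot and first-order elimination, integrated with explicit Euler steps. It is meant only to show how such models are written as differential equations and checked against a known analytic solution. It assumes complete bioavailability, and the function names, dose, rate constants, and volume are hypothetical.

```python
import math


def simulate_sc_dose(dose, ka, ke, volume, t_end, dt=0.01):
    """Explicit-Euler integration of a one-compartment model with first-order
    absorption (depot -> central) and first-order elimination from central.
    Returns a list of (time, concentration) pairs."""
    depot, central, t = dose, 0.0, 0.0
    profile = [(t, central / volume)]
    for _ in range(int(round(t_end / dt))):
        d_depot = -ka * depot
        d_central = ka * depot - ke * central
        depot += d_depot * dt
        central += d_central * dt
        t += dt
        profile.append((t, central / volume))
    return profile


def analytic_conc(t, dose, ka, ke, volume):
    """Closed-form (Bateman) solution for the same model, assuming ka != ke."""
    return dose * ka / (volume * (ka - ke)) * (math.exp(-ke * t) - math.exp(-ka * t))


# Hypothetical inputs: 100 mg dose, ka = 0.3/day, ke = 0.03/day, V = 3 L, 60 days.
dose, ka, ke, volume = 100.0, 0.3, 0.03, 3.0
profile = simulate_sc_dose(dose, ka, ke, volume, t_end=60.0)
t_peak, c_peak = max(profile, key=lambda point: point[1])
print(f"Simulated peak: {c_peak:.2f} mg/L at day {t_peak:.2f}")
print(f"Analytic peak time: day {math.log(ka / ke) / (ka - ke):.2f}")
```

A full PBPK model follows the same pattern with many more state variables and physiological parameters, which is what allows it to be scaled across species.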

Summary

In this article, the authors have outlined steps toward the development of affordable biosimilars by relying more on preclinical studies and less on clinical trials, without compromising safety and efficacy. In the case of biosimilars, regulatory authorities require analytical similarity data before deciding on equivalence in clinical studies. Despite an enormous investment in clinical analysis, the results often fail to differentiate a biosimilar from the reference product in terms of efficacy or immunogenicity. Thus, from a socio-economic perspective, conducting preclinical in-vivo studies has the potential to make drugs affordable in lower-income economies. With the exponential technological and computational advances of recent years, understanding, designing, and developing biosimilars can be greatly simplified. With the use of “omics” tools, big-data analytics, bio-mathematical modeling, and in-silico and in-vitro tools, the preclinical development of biosimilars can be transformed.

References

1. G. Walsh, Nat. Biotechnol. 32 (10) 992-1000 (2018).

2. J.A. DiMasi, H.G. Grabowski, and R.W. Hansen, J. Health Econ. 47, 20-33 (2016).

3. J. Chung, Exp. Mol. Med. 49 (3) e304 (2017).

4. F.K. Agbogbo, et al., J. Ind. Microbiol. Biotechnol. 46 (9-10) 1297-1311 (2019).

5. F.X. Frapaise, BioDrugs 32 (4) 319-324 (2018).

6. M. Balls, J. Bailey, and R.D. Combes, Expert Opin. Drug Metab. Toxicol. 15 (12) 985-987 (2019).

7. A.S. Rathore, Trends Biotechnol. 27 (12) 698-705 (2009).

8. L.M. Friedman, et al., "Introduction to Clinical Trials," in Fundamentals of Clinical Trials (Springer, Cham, Switzerland, 5th ed., 2015), pp. 1-23.

9. D.J. Houde and S.A. Berkowitz, "Biophysical Characterization and its Role in the Biopharmaceutical Industry," in Biophysical Characterization of Proteins in Developing Biopharmaceuticals, D.J. Houde and S.A. Berkowitz, Eds. (Elsevier, 2nd ed., 2020), pp. 27-53.

10. K. Krawczyk, J. Dunbar, and C.M. Deane, "Computational Tools for Aiding Rational Antibody Design," in Computational Protein Design, Methods in Molecular Biology, I. Samish, Ed. (Humana Press, New York, NY, 2017), pp. 399-416.

11. ICH, Q8(R2) Pharmaceutical Development, Current Step 4 (2009).

12. A.S. Rathore, I.S. Krull, and S. Joshi, LCGC North Am. 36 (6) 376-384 (2018).

13. W.C. Lamanna, et al., Expert Opin. Biol. Ther. 18 (4) 369-379 (2018).

14. FDA, Biosimilar Development, Review, and Approval (Rockville, MD, 2017).

15. J. Geigert, "Complex Process-Related Impurity Profiles," in The Challenge of CMC Regulatory Compliance for Biopharmaceuticals (Springer, Cham, Switzerland, 3rd ed., 2019), pp. 231-260.

16. J. Gokemeijer, V. Jawa, and S. Mitra-Kaushik, AAPS J. 19 (6) 1587-1592 (2017).

17. European Medicines Agency, Guideline on Immunogenicity Assessment of Therapeutic Proteins (2017).

18. A.S. Rathore, D. Kumar, and N. Kateja, Curr. Opin. Biotechnol. 53, 99-105 (2018).

19. H.P. Cohen, et al., Drugs 78 (4) 463-478 (2018).

20. V. Háda, et al., J. Pharm. Biomed. Anal. 161, 214-238 (2018).

21. A.S. Rathore, I.S. Krull, and S. Joshi, LCGC North Am. 36 (11) 814-822 (2018).

22. V. Kairys, et al., Expert Opin. Drug Discov. 14 (8) 755-768 (2019).

23. B. Majumder, et al., Nat. Commun. 6 (1) 1-14 (2015).

24. ICH, S6 Preclinical Safety Evaluation of Biotechnology-Derived Pharmaceuticals (2011).

25. P. Pipalava, et al., Regul. Toxicol. Pharmacol. 107, 104415 (2019).

26. L.M. Chaible, et al., "Genetically Modified Animal Models," in Animal Models for the Study of Human Disease, P.M. Conn, Ed. (Academic Press, 2nd ed., 2017), pp. 703-726.

27. T.M. Monticello, et al., Toxicol. Appl. Pharmacol. 334, 100-109 (2017).

28. L.A. van Aerts, et al., MAbs 6 (5) 1155-1162 (2014).

29. A.S. Rathore and A. Bhargava, Regul. Toxicol. Pharmacol. 110, 104525 (2020).

30. C.J. Webster, A.C. Wong, and G.R. Woollett, BioDrugs 33 (6) 603-611 (2019).

31. W.S. Stokes, Hum. Exp. Toxicol. 34 (12) 1297-1303 (2015).

32. W. Singer, et al., "Animal Research in the Max Planck Society," White Paper (2016).

33. T. Heinonen and C. Verfaillie, "The Development and Application of Key Technologies and Tools," in The History of Alternative Test Methods in Toxicology, M. Balls, R. Combes, and A. Worth, Eds. (Academic Press, 1st ed., 2015), pp. 265-278.

About the authors

Anurag S. Rathore, PhD* is professor in the Department of Chemical Engineering and coordinator, DBT Center of Excellence for Biopharmaceutical Technology, at the Indian Institute of Technology, Delhi, Hauz Khas, New Delhi, 110016, India, asrathore@biotechcmz.com, Phone: +91-11-26591098. Narendra Chirmule, PhD, is CEO at SymphonyTech Biologics. Himanshu Malani is a graduate student at the Indian Institute of Technology, Delhi.

*To whom all correspondence should be addressed.